Installing orcharhino Server

This guide describes how to install orcharhino Server in a connected environment. You can install orcharhino Server on AlmaLinux 8, Oracle Linux 8, Red Hat Enterprise Linux 8, and Rocky Linux 8.

-

If you want to install orcharhino Server within your VMware vSphere environment, you can use the orcharhino OVA image to run orcharhino on AlmaLinux 8. The Open Virtual Appliance (OVA) image creates the orcharhino host in your VMware vSphere environment and automatically starts the installation process.

-

If you want to provision a host to install orcharhino Server on, ATIX AG provides Kickstart files for AlmaLinux 8, Oracle Linux 8, and Rocky Linux 8. This works in virtually any scenario, including bare-metal installations.

-

If you already have an existing host for orcharhino Server, start a manual installation.

orcharhino is available through a subscription model. Please contact us about getting access.

Start your orcharhino Server installation process by carefully reading all prerequisites and requirements. If you want to deploy hosts into networks other than the one your orcharhino Server is in, you also need an orcharhino Proxy Server installed in each target network.

Prerequisites and System Requirements

ATIX AG recommends installing orcharhino Server on a virtual server. Among other advantages, this allows you to create snapshots for backups. Optionally, you can install orcharhino Server on a bare-metal system.

Before you install orcharhino Server, ensure that your environment meets the following requirements:

-

A host as outlined in the system requirements.

-

A network infrastructure as outlined in the network requirements.

-

An orcharhino Subscription Key used to register your orcharhino Server with ATIX AG.

If you have an orcharhino subscription, you will receive your orcharhino Subscription Key and the required download links in your initial welcome email. If you have not received your welcome email, please contact us.

-

A browser on a secondary device that is able to resolve a route to your orcharhino Server.

-

A working internet connection, either directly or by using an HTTP/HTTPS proxy.

-

A unique host name that contains only lower-case letters, numbers, dots (.), and hyphens (-).
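The host name rules above can be checked before you start. The following POSIX shell function is a minimal sketch with a hypothetical name: it enforces the allowed characters from this list and the "at least one dot" rule that this guide states for the FQDN in the appliance and install_orcharhino.sh steps. The requirement that each label begins and ends with a letter or digit is an assumption borrowed from the usual RFC 1123 host name convention.

```shell
#!/bin/sh
# Sketch: check a planned orcharhino host name before the installation.
# From this guide: only lower-case letters, numbers, dots, and hyphens, and at
# least one dot (host name plus domain name part).
# Assumption: every dot-separated label starts and ends with a letter or digit,
# following the common RFC 1123 host name convention.
is_valid_orcharhino_fqdn() {
  # Reject empty input and any character outside a-z, 0-9, "." and "-".
  # Upper-case letters, underscores, spaces, and newlines are rejected here.
  case $1 in
    '' | *[!abcdefghijklmnopqrstuvwxyz0123456789.-]*) return 1 ;;
  esac
  # Require at least two labels separated by dots.
  label='[a-z0-9]([a-z0-9-]*[a-z0-9])?'
  printf '%s\n' "$1" | LC_ALL=C grep -Eq "^${label}(\\.${label})+\$"
}

# Usage: sh check_fqdn.sh orcharhino.example.com
if [ $# -gt 0 ]; then
  if is_valid_orcharhino_fqdn "$1"; then
    echo "valid: $1"
  else
    echo "invalid: $1" >&2
    exit 1
  fi
fi
```

Running it with orcharhino.example.com succeeds, while names such as Orcharhino.example.com or orcharhino (no domain part) are rejected.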

ATIX AG does not support using third-party repositories on your orcharhino Server or orcharhino Proxy Servers. Resolving package conflicts or other issues caused by third-party or custom repositories is not part of your orcharhino support subscription. Please contact us if you have any questions.

System Requirements

The system must meet the following requirements, regardless of whether it is a virtual machine or a bare-metal server:

|  | Minimum | Recommended |
|---|---|---|
| OS | AlmaLinux 8, Oracle Linux 8, Red Hat Enterprise Linux 8, or Rocky Linux 8. For more information, see OS requirements. | |
| CPU | 4 cores | 8 cores |
| RAM | 20 GiB | 32 GiB |
| HDD 1 (root partition) | 30 GiB | 50 GiB |
| HDD 2 (data repositories) | ~ 40 GiB for each Enterprise Linux distribution; ~ 80 GiB for each Debian or Ubuntu distribution | ~ 500 GiB (or as appropriate) if you plan to maintain additional repositories or keep multiple versions of packages |
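To turn the HDD 2 estimates into a number for a concrete setup, you can multiply them out. The shell function below is only a rough sketch with a hypothetical name, based on the approximate per-distribution figures in the table. It adds no headroom for additional repositories or multiple package versions, for which the table suggests about 500 GiB.

```shell
#!/bin/sh
# Rough sizing sketch for HDD 2 (data repositories), using the approximate
# figures from the system requirements: about 40 GiB per Enterprise Linux
# distribution and about 80 GiB per Debian or Ubuntu distribution.
# It does not account for extra repositories or multiple package versions.
estimate_hdd2_gib() {
  el_count=$1   # Enterprise Linux distributions you plan to synchronize
  deb_count=$2  # Debian or Ubuntu distributions you plan to synchronize
  echo $((el_count * 40 + deb_count * 80))
}

# Example: two Enterprise Linux distributions and one Ubuntu release.
estimate_hdd2_gib 2 1   # prints 160
```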

orcharhino Server requires two hard drives; one is used for the root partition and the other one for the data repositories. This separation is essential for the creation of snapshots and backups. The Kickstart files provided by ATIX AG do not work with a single drive system.

The main directories on /var are:

-

/var/cache/pulp/

-

/var/lib/pulp/

-

/var/lib/pgsql/

While it is technically possible to use different partitions for those directories, ATIX AG does not recommend doing so as it will negatively affect the overall performance of your orcharhino.

Using symbolic links is not an option as they break orcharhino-installer and corrupt the SELinux context if introduced at a later stage.

Ensure that you allocate sufficient hard drive resources from the beginning. Running out of space for your data repositories during regular orcharhino usage leads to significant problems.

orcharhino-maintain creates backups using LVM snapshots. To create such backups, ensure that the LVM volume group where vg-data resides has more than 2 GiB of free disk space available.

The free disk space is used during the creation of the snapshot to store all changes that are made to the database.
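Before relying on snapshot-based backups, you can check the free space of the volume group with the standard LVM vgs command. The script below is a sketch under the assumption that you pass the volume group name as the first argument; the function names and the My_Volume_Group placeholder are hypothetical.

```shell
#!/bin/sh
# Sketch: check that an LVM volume group has more than 2 GiB of free space
# for the snapshots that orcharhino-maintain backups rely on.
# Assumption: you pass the name of the volume group where vg-data resides;
# the name in the usage line below is a placeholder, so check yours with "vgs".

# Succeeds (exit status 0) if the given free space in GiB is greater than 2.
has_snapshot_headroom() {
  awk -v free="$1" 'BEGIN { exit !(free + 0 > 2) }'
}

# Prints the free space of a volume group in GiB (powers of 1024).
# tr removes the column padding and any "<" rounding marker printed by LVM.
vg_free_gib() {
  vgs --noheadings --nosuffix --units g -o vg_free "$1" | tr -d ' <'
}

# Usage (as root): sh check_vg_free.sh My_Volume_Group
if [ $# -gt 0 ]; then
  free=$(vg_free_gib "$1")
  if [ -z "$free" ]; then
    echo "could not read free space of volume group $1" >&2
    exit 1
  fi
  if has_snapshot_headroom "$free"; then
    echo "OK: $1 has $free GiB free"
  else
    echo "WARNING: $1 has only $free GiB free; more than 2 GiB is required" >&2
    exit 1
  fi
fi
```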

OS Requirements

You can install orcharhino Server on AlmaLinux 8, Oracle Linux 8, Red Hat Enterprise Linux 8, and Rocky Linux 8. Your orcharhino Subscription Key is specific to your chosen platform. After your orcharhino Server is registered with ATIX AG, it receives packages for orcharhino Server and for your respective platform directly from ATIX AG. ATIX AG updates the platform packages regularly.

The installation of orcharhino Server on Oracle Linux is only supported on the latest released Oracle Linux 8 minor version (8.Y). You can find the latest Oracle Linux 8.Y release on yum.oracle.com.
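To confirm that a host runs one of the supported platforms, you can read /etc/os-release, which AlmaLinux, Oracle Linux, Red Hat Enterprise Linux, and Rocky Linux all provide. The function below is a hypothetical sketch that accepts the ID values almalinux, ol, rhel, and rocky together with a major version of 8. It does not check whether an Oracle Linux host is on the latest 8.Y minor release.

```shell
#!/bin/sh
# Sketch: check whether an os-release file describes one of the platforms this
# guide lists: AlmaLinux 8, Oracle Linux 8, Red Hat Enterprise Linux 8, or
# Rocky Linux 8. Only ID and the major version are checked; this does not verify
# that an Oracle Linux host runs the latest 8.Y minor release.
is_supported_platform() {
  os_release=${1:-/etc/os-release}
  # Source the file in a subshell so the caller's environment stays untouched.
  (
    unset ID VERSION_ID
    . "$os_release" || exit 1
    case ${ID:-} in
      almalinux | ol | rhel | rocky) ;;
      *) exit 1 ;;
    esac
    case ${VERSION_ID:-} in
      8 | 8.*) exit 0 ;;
      *) exit 1 ;;
    esac
  )
}

# Usage: is_supported_platform && echo "supported platform"
```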

If you perform a manual orcharhino Server installation, you have to download the install_orcharhino.sh script and run it.

For more information, see Installing orcharhino Server Using the install_orcharhino.sh Script.

Ensure that you have the necessary Oracle Linux or Red Hat Enterprise Linux subscription if you want to install orcharhino Server on Oracle Linux or Red Hat Enterprise Linux. Your orcharhino subscription does not include any Oracle Linux or Red Hat Enterprise Linux subscriptions. Please contact us if you need help obtaining the relevant subscriptions or have questions on how to use your existing subscriptions.

Network Requirements

orcharhino works best when it is allowed to manage the networks it deploys hosts to, which means that it acts as DHCP, DNS, and TFTP server for those networks. Allowing orcharhino to manage networks in this way is optional but should be considered when planning an orcharhino installation. Running two DHCP services in the same network causes networking issues. Please contact us if you have any questions.

For orcharhino to manage hosts in one or more networks, it must be able to communicate with those hosts, possibly through an orcharhino Proxy Server. Therefore, you must open the ports for each service that you intend to use. Generally, three system types need to connect to each other: orcharhino Server, orcharhino Proxies, and managed hosts.

| Port | Protocol | SSL | Required for |
|---|---|---|---|
| 8015 | TCP | no | orcharhino installer GUI |

You can omit this using the --skip-gui option when running the install_orcharhino.sh script.

| Port | Protocol | SSL | Required for |
|---|---|---|---|
| 53 | TCP & UDP | no | DNS Services |
| 67 | UDP | no | DHCP Service |
| 69 | UDP | no | PXE boot |
| 80 | TCP | no | Anaconda, yum, templates, iPXE |
| 443 | TCP | yes | Subscription Management, yum, Katello |
| 5000 | TCP | yes | Katello for Docker registry |
| 8000 | TCP | yes | Anaconda for downloading Kickstart templates, iPXE |
| 8140 | TCP | yes | Puppet agent to Puppet master |
| 9090 | TCP | yes | OpenSCAP reports |

| Port | Protocol | SSL | Required for |
|---|---|---|---|
| 53 | TCP & UDP | no | DNS Services |
| 67 | UDP | no | DHCP Service |
| 69 | UDP | no | PXE boot |
| 80 | TCP | no | Anaconda, yum, templates, iPXE |
| 443 | TCP | yes | yum, Katello |
| 5000 | TCP | yes | Katello for Docker registry |
| 8000 | TCP | yes | Anaconda for downloading Kickstart templates, iPXE |
| 8140 | TCP | yes | Puppet agent to Puppet master |
| 8443 | TCP | yes | Subscription Management |
| 9090 | TCP | yes | OpenSCAP reports |

Port and firewall requirements

For the components of orcharhino architecture to communicate, ensure that the required network ports are open and free on the base operating system. You must also ensure that the required network ports are open on any network-based firewalls.

Use this information to configure any network-based firewalls. Note that some cloud solutions must be specifically configured to allow communications between machines because they isolate machines similarly to network-based firewalls. If you use an application-based firewall, ensure that the application-based firewall permits all applications that are listed in the tables and known to your firewall. If possible, disable the application checking and allow open port communication based on the protocol.

orcharhino Server has an integrated orcharhino Proxy and any host that is directly connected to orcharhino Server is a Client of orcharhino in the context of this section. This includes the base operating system on which orcharhino Proxy Server is running.

Hosts which are clients of orcharhino Proxies, other than orcharhino’s integrated orcharhino Proxy, do not need access to orcharhino Server.

Required ports can change based on your configuration.

The following tables indicate the destination port and the direction of network traffic:

| Destination Port | Protocol | Service | Source | Required For | Description |
|---|---|---|---|---|---|
| 53 | TCP and UDP | DNS | DNS Servers and clients | Name resolution | DNS (optional) |
| 67 | UDP | DHCP | Client | Dynamic IP | DHCP (optional) |
| 69 | UDP | TFTP | Client | TFTP Server (optional) | |
| 443 | TCP | HTTPS | orcharhino Proxy | orcharhino API | Communication from orcharhino Proxy |
| 443, 80 | TCP | HTTPS, HTTP | Client | Global Registration | Registering hosts to orcharhino. Port 443 is required for registration initiation, uploading facts, and sending installed packages and traces. Port 80 notifies orcharhino on the |
| 443 | TCP | HTTPS | orcharhino | Content Mirroring | Management |
| 443 | TCP | HTTPS | orcharhino | orcharhino Proxy API | Smart Proxy functionality |
| 443, 80 | TCP | HTTPS, HTTP | orcharhino Proxy | Content Retrieval | Content |
| 443, 80 | TCP | HTTPS, HTTP | Client | Content Retrieval | Content |
| 1883 | TCP | MQTT | Client | Pull-based REX (optional) | Content hosts for REX job notification (optional) |
| 5910 – 5930 | TCP | HTTPS | Browsers | Compute Resource’s virtual console | |
| 8000 | TCP | HTTP | Client | Provisioning templates | Template retrieval for client installers, iPXE or UEFI HTTP Boot |
| 8000 | TCP | HTTPS | Client | PXE Boot | Installation |
| 8140 | TCP | HTTPS | Client | Puppet agent | Client updates (optional) |
| 9090 | TCP | HTTPS | orcharhino | orcharhino Proxy API | Smart Proxy functionality |
| 9090 | TCP | HTTPS | Client | OpenSCAP | Configure Client (if the OpenSCAP plugin is installed) |
| 9090 | TCP | HTTPS | Discovered Node | Discovery | Host discovery and provisioning (if the discovery plugin is installed) |

Any host that is directly connected to orcharhino Server is a client in this context because it is a client of the integrated orcharhino Proxy. This includes the base operating system on which an orcharhino Proxy Server is running.

A DHCP orcharhino Proxy performs ICMP ping or TCP echo connection attempts to hosts in subnets with DHCP IPAM set to find out if an IP address considered for use is free.

This behavior can be turned off using orcharhino-installer --foreman-proxy-dhcp-ping-free-ip=false.

Some outgoing traffic returns to orcharhino to enable internal communication and security operations.

| Destination Port | Protocol | Service | Destination | Required For | Description |
|---|---|---|---|---|---|
| | ICMP | ping | Client | DHCP | Free IP checking (optional) |
| 7 | TCP | echo | Client | DHCP | Free IP checking (optional) |
| 22 | TCP | SSH | Target host | Remote execution | Run jobs |
| 22, 16514 | TCP | SSH, SSH/TLS | Compute Resource | orcharhino originated communications, for compute resources in libvirt | |
| 53 | TCP and UDP | DNS | DNS Servers on the Internet | DNS Server | Resolve DNS records (optional) |
| 53 | TCP and UDP | DNS | DNS Server | orcharhino Proxy DNS | Validation of DNS conflicts (optional) |
| 53 | TCP and UDP | DNS | DNS Server | Orchestration | Validation of DNS conflicts |
| 68 | UDP | DHCP | Client | Dynamic IP | DHCP (optional) |
| 80 | TCP | HTTP | Remote repository | Content Sync | Remote repositories |
| 389, 636 | TCP | LDAP, LDAPS | External LDAP Server | LDAP | LDAP authentication, necessary only if external authentication is enabled. The port can be customized when |
| 443 | TCP | HTTPS | orcharhino | orcharhino Proxy | orcharhino Proxy, Configuration management, Template retrieval, OpenSCAP, Remote Execution result upload |
| 443 | TCP | HTTPS | Amazon EC2, Azure, Google GCE | Compute resources | Virtual machine interactions (query/create/destroy) (optional) |
| 443 | TCP | HTTPS | orcharhino Proxy | Content mirroring | Initiation |
| 443 | TCP | HTTPS | Infoblox DHCP Server | DHCP management | When using Infoblox for DHCP, management of the DHCP leases (optional) |
| 623 | | | Client | Power management | BMC On/Off/Cycle/Status |
| 5900 – 5930 | TCP | SSL/TLS | Hypervisor | noVNC console | Launch noVNC console |
| 5985 | TCP | HTTP | Client | WinRM | Configure Client running Windows |
| 5986 | TCP | HTTPS | Client | WinRM | Configure Client running Windows |
| 7911 | TCP | DHCP, OMAPI | DHCP Server | DHCP | The DHCP target is configured using ISC and |
| 8443 | TCP | HTTPS | Client | Discovery | orcharhino Proxy sends reboot command to the discovered host (optional) |
| 9090 | TCP | HTTPS | orcharhino Proxy | orcharhino Proxy API | Management of orcharhino Proxies |
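After you configure your firewalls, you can spot-check whether the TCP ports from the tables above are reachable, for example from a managed host or an orcharhino Proxy. The script below is a sketch with a hypothetical function name that relies on bash's /dev/tcp redirection and the coreutils timeout command. It cannot test UDP ports such as 67 or 69, and "closed or filtered" only means that no TCP connection was established within three seconds.

```shell
#!/bin/sh
# Sketch: check from a host whether TCP ports on orcharhino Server or an
# orcharhino Proxy accept connections. Only TCP is covered; UDP services such as
# DHCP (67) or TFTP (69) cannot be tested this way.
# Assumptions: bash (for /dev/tcp) and the coreutils "timeout" command exist.
tcp_port_open() {
  # bash opens a TCP connection when redirecting from /dev/tcp/HOST/PORT;
  # timeout stops the attempt after 3 seconds, for example when packets are dropped.
  timeout 3 bash -c ': < "/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null
}

# Usage: sh check_ports.sh orcharhino.example.com 443 80 8000 8140 9090
if [ $# -gt 1 ]; then
  target=$1
  shift
  for port in "$@"; do
    if tcp_port_open "$target" "$port"; then
      echo "$target:$port open"
    else
      echo "$target:$port closed or filtered"
    fi
  done
fi
```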

Firewall Configuration

orcharhino Server uses firewalld.

The firewall is automatically set up and configured when installing or upgrading orcharhino.

You can run firewall-cmd --state to view the current state of the firewall.

For more information, see Configuring the Firewall on orcharhino.

orcharhino Appliance Requirements

If you perform an orcharhino appliance installation, you require the following:

-

A VMware vSphere environment in version 6.0 or later.

-

The orcharhino OVA image, which you can request on orcharhino.com/try-me/anmeldung-zum-orcharhino-download or find in the ATIX Service Portal. Please contact us if you have any questions.

Kickstart Requirements

If you are performing a Kickstart installation, you require the following:

-

A Kickstart file

If you have an orcharhino subscription, you will receive your orcharhino Subscription Key and the required download links in your initial welcome email. If you have not received your welcome email, please contact us.

-

An .iso image of one of the following:

-

AlmaLinux 8 from almalinux.org

-

Oracle Linux 8 from oracle.com

-

Rocky Linux 8 from rockylinux.org

-

ATIX AG maintains different Kickstart files depending on the operating system, hard drives, and firmware you are using:

-

AlmaLinux 8 with SATA disks (/dev/sdX) with EFI /boot partition: orcharhino_alma_el8_sdX_efi.ks

-

AlmaLinux 8 with SATA disks (/dev/sdX): orcharhino_alma_el8_sdX.ks

-

AlmaLinux 8 with VirtIO disks (/dev/vdX) with EFI /boot partition: orcharhino_alma_el8_vdX_efi.ks

-

AlmaLinux 8 with VirtIO disks (/dev/vdX): orcharhino_alma_el8_vdX.ks

-

Oracle Linux 8 with SATA disks (/dev/sdX) with EFI /boot partition: orcharhino_oracle_el8_sdX_efi.ks

-

Oracle Linux 8 with SATA disks (/dev/sdX): orcharhino_oracle_el8_sdX.ks

-

Oracle Linux 8 with VirtIO disks (/dev/vdX) with EFI /boot partition: orcharhino_oracle_el8_vdX_efi.ks

-

Oracle Linux 8 with VirtIO disks (/dev/vdX): orcharhino_oracle_el8_vdX.ks

-

Rocky Linux 8 with SATA disks (/dev/sdX) with EFI /boot partition: orcharhino_rocky_el8_sdX_efi.ks

-

Rocky Linux 8 with SATA disks (/dev/sdX): orcharhino_rocky_el8_sdX.ks

-

Rocky Linux 8 with VirtIO disks (/dev/vdX) with EFI /boot partition: orcharhino_rocky_el8_vdX_efi.ks

-

Rocky Linux 8 with VirtIO disks (/dev/vdX): orcharhino_rocky_el8_vdX.ks
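If you are unsure which Kickstart file matches your host, the naming pattern in the list above can be expressed as a small lookup. This shell function is a hypothetical helper; its argument keywords (alma, oracle, rocky; sd, vd; efi, plain) are made up for this sketch and are not part of any ATIX AG tooling.

```shell
#!/bin/sh
# Sketch: map your setup to the Kickstart file names listed above.
# Arguments: distribution (alma, oracle, or rocky), disk type (sd for SATA
# disks /dev/sdX, vd for VirtIO disks /dev/vdX), and boot layout ("efi" for the
# files with EFI /boot partition, "plain" for the variant without it).
# The argument keywords are made up for this sketch.
kickstart_file() {
  case $1 in alma | oracle | rocky) ;; *) return 1 ;; esac
  case $2 in sd | vd) ;; *) return 1 ;; esac
  case $3 in
    efi) echo "orcharhino_${1}_el8_${2}X_efi.ks" ;;
    plain) echo "orcharhino_${1}_el8_${2}X.ks" ;;
    *) return 1 ;;
  esac
}

# Example: Rocky Linux 8 on VirtIO disks with EFI.
kickstart_file rocky vd efi   # prints orcharhino_rocky_el8_vdX_efi.ks
```

You then reference the chosen file with the ks= boot option, for example ks=http://example.com/path/to/orcharhino_rocky_el8_vdX_efi.ks.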

Appliance Installation Steps

You can use the orcharhino appliance to install orcharhino Server in your VMware environment. These instructions presume prior experience using VMware’s vSphere client. The orcharhino appliance contains a parameterized but otherwise preconfigured AlmaLinux 8 base system that significantly simplifies the deployment and installation process.

-

Download the orcharhino OVA image to your local client machine.

-

Open the VMware vSphere client in a browser on your local client machine.

-

Right-click on your datacenter, cluster, or host and select Deploy OVF Template.

-

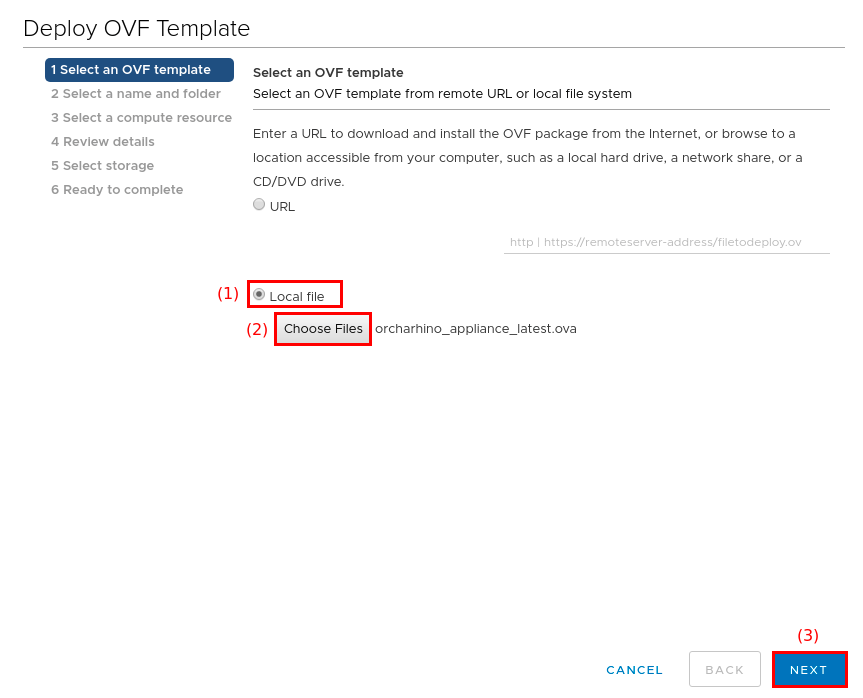

On the Select an OVF template screen:

-

Select Local file (1).

ATIX AG does not recommend entering the OVA download link directly.

-

Click Choose Files (2) and select the previously downloaded orcharhino OVA image.

-

Click Next (3) to continue.

-

-

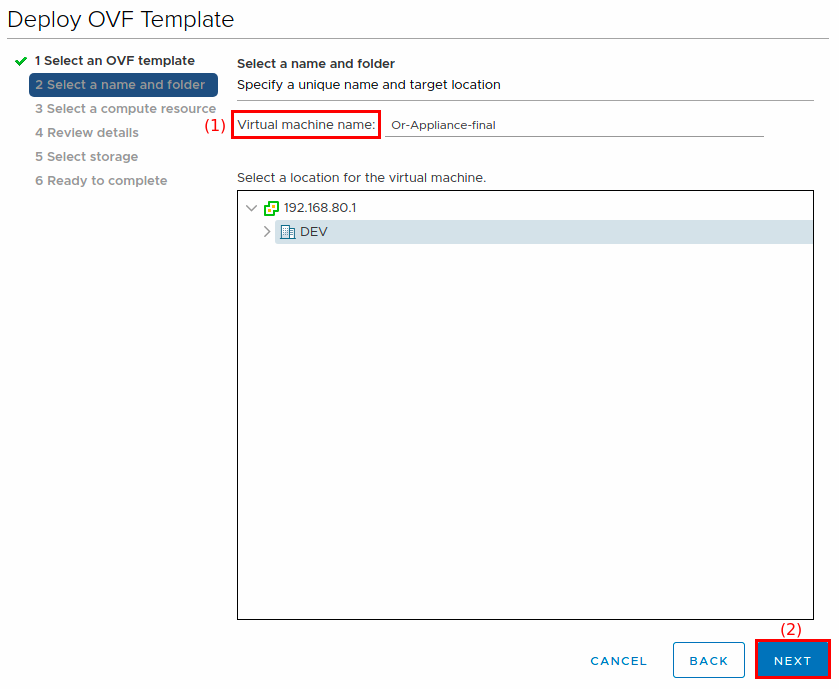

On the Select a name and folder screen:

-

Enter the Virtual machine name (1) for your orcharhino. If in doubt, use the FQDN or the host name you want to use for your orcharhino.

-

Select a location for the new virtual machine.

-

Click Next (2) to continue.

-

-

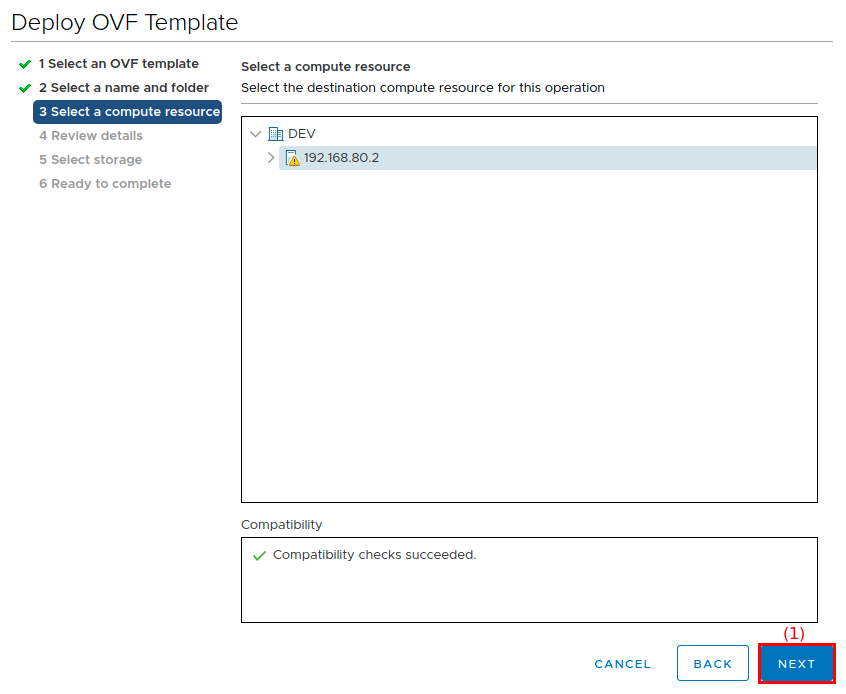

On the Select a compute resource screen:

-

Select a host or cluster.

-

Click Next (1) to continue.

-

-

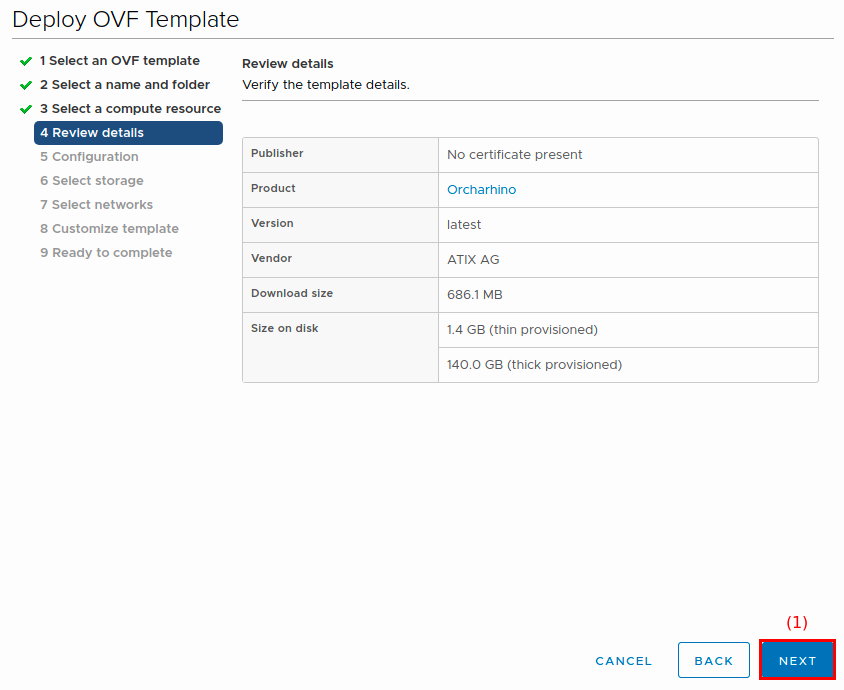

On the Review details screen:

-

Review your settings.

-

Click Next (1) to continue.

-

-

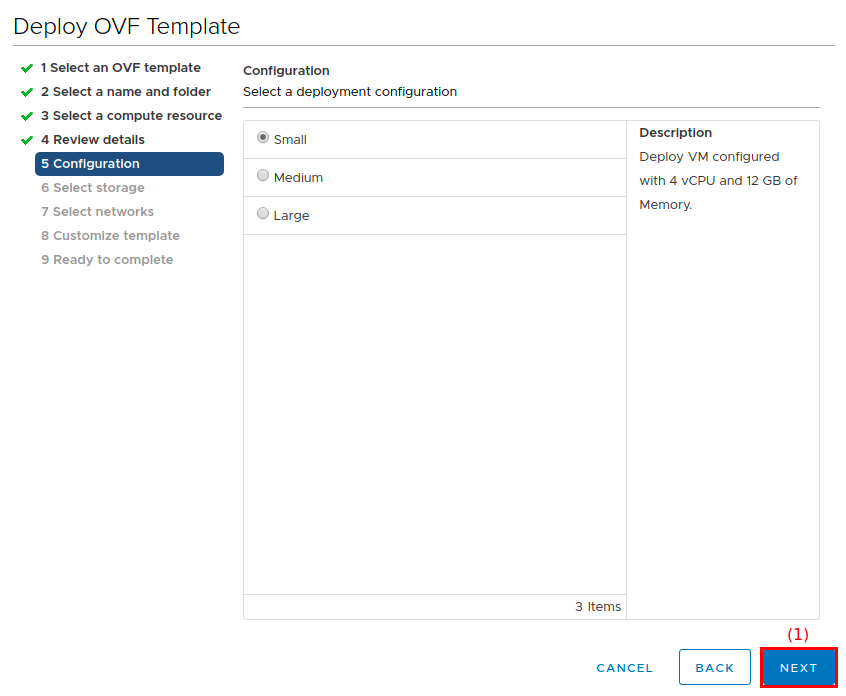

On the Configuration screen:

-

Choose the size for your orcharhino instance by looking at the description of each option.

-

Click Next (1) to continue.

-

-

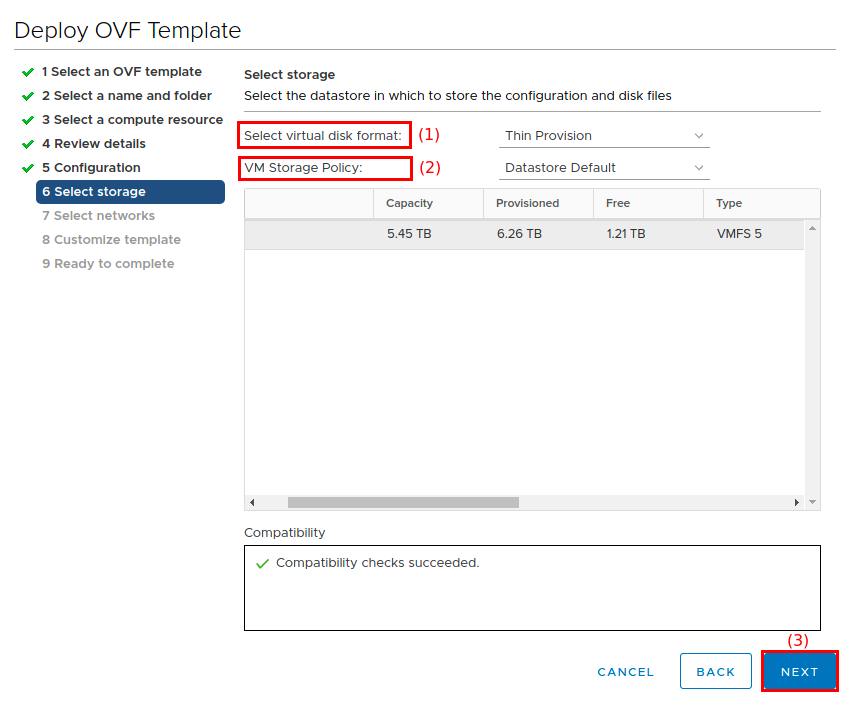

On the Select storage screen:

-

Select a hard disk format for the Select virtual disk format field (1).

Choosing thick provision takes considerably longer to allocate storage space.

-

Choose a data store or data store cluster in the VM Storage Policy field (2).

-

Click Next (3) to continue.

-

-

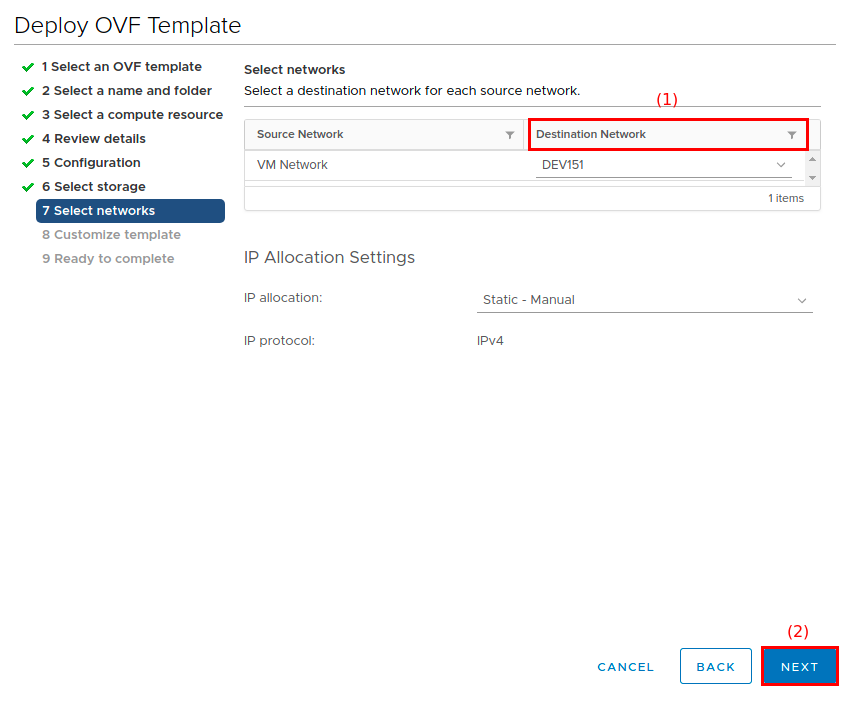

On the Select networks screen:

-

Select an available network from the Destination Network field (1).

Do not change the default values under IP Allocation Settings.

For IP allocation, leave the default value of static - manual even if you want to use DHCP. DHCP and IP allocation are configured during the next step.

-

Click Next (2) to continue.

-

-

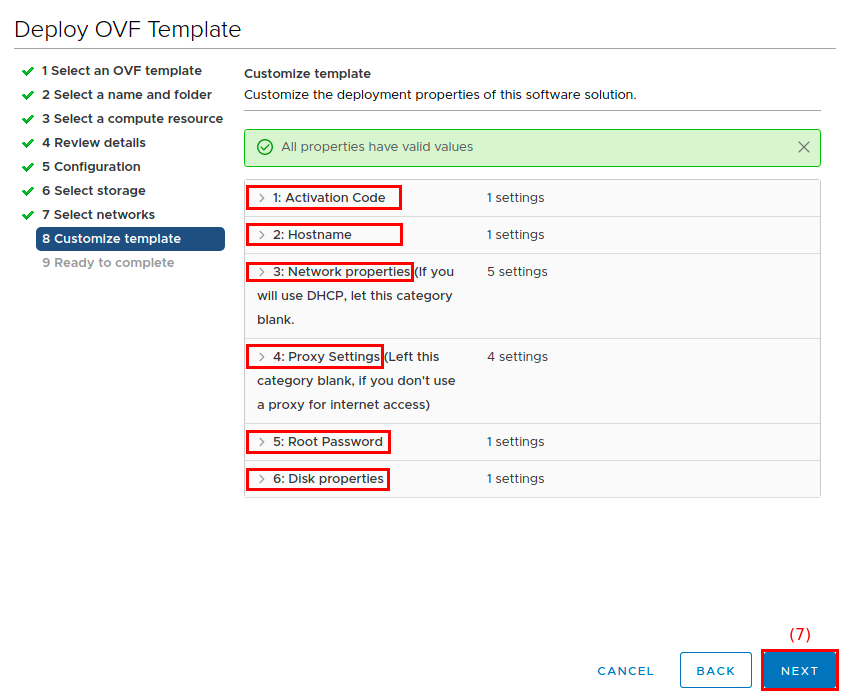

On the Customize template screen:

-

Enter your orcharhino Subscription Key in the field provided in the Subscription Key foldout menu (1).

-

For the Hostname foldout menu (2), enter the FQDN of your orcharhino.

The provided FQDN must contain both a host name and a domain name part. That is, it must contain at least one dot, for example, orcharhino.example.com.

-

If you do not already have a DHCP service for this network and allow orcharhino to manage DHCP in the network, complete all fields from the Network Properties foldout menu (3).

-

If your organization uses an HTTP/HTTPS proxy to access the internet, complete all fields from the Proxy Settings foldout menu (4).

-

Set the root password of your orcharhino host in the Root Password foldout menu (5). If you leave this field blank, the root password defaults to atix. Ensure that you set a strong root password.

-

Set the size of your dynamic hard drive in the Disk Properties foldout menu (6). This hard drive is added to the logical volume containing the /var partition, which houses your content repositories. The default value is set to 50 GiB. For more information, see system requirements.

-

Use the Customer CA field to upload a custom CA certificate in PEM format. This is necessary if your HTTPS proxy uses a self-signed certificate that is not trusted by a global root CA.

-

Click Next (9) to continue.

-

-

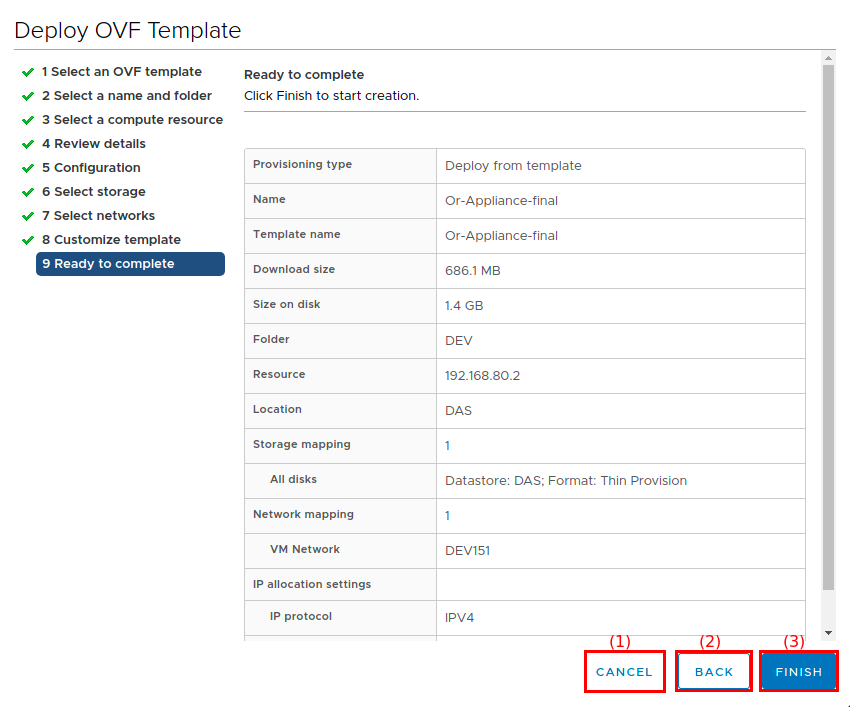

On the Ready to complete screen:

-

Click Cancel (1) to cancel the entire installation process.

-

Click Back (2) to review your settings.

-

Click Finish (3) to start the deployment.

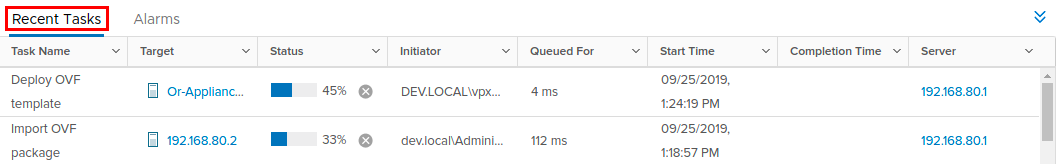

You can track the progress of your deployment under Recent Tasks in your vSphere client:

-

-

After the deployment is complete, select the new orcharhino VM in your VMware inventory and click Launch Remote Console or Launch Web Console.

-

Click ACTIONS > Power > Power On to start your new VM. This automatically starts the orcharhino installation in the console you opened in step 13.

After your orcharhino appliance is successfully registered with ATIX AG, a link to the orcharhino Installer GUI is displayed in the console from step 13.

-

Enter the link in your browser and continue with the orcharhino Installer GUI.

Kickstart Installation Steps

You can use the Kickstart files provided by ATIX AG to install AlmaLinux 8, Oracle Linux 8, or Rocky Linux 8 on the host orcharhino will run on.

-

Either virtually mount your installation media or place its physical equivalent in the DVD tray for a bare-metal installation.

-

Boot your system from the installation media. Immediately halt the boot process by pressing the Tab key and append the following boot option:

ks=http://example.com/path/to/kickstart_file.ks

-

Press Enter to start the automatic Kickstart installation. The installation process prompts you to press Enter from time to time.

-

After the Kickstart installation has successfully completed, run the install_orcharhino.sh script.

Installing orcharhino Server Using the install_orcharhino.sh Script

You can use install_orcharhino.sh to start the installation process.

The script registers your orcharhino Server with ATIX AG and starts the orcharhino Installer GUI.

The Kickstart installation places the install_orcharhino.sh script in the /root/ directory on your orcharhino Server.

Alternatively, download the script directly from ATIX AG.

If you have an orcharhino subscription, you will receive your orcharhino Subscription Key and the required download links in your initial welcome email. If you have not received your welcome email, please contact us.

The install_orcharhino.sh script supports multiple options and requires your orcharhino Subscription Key.

Run ./install_orcharhino.sh --help for a full list of options and usage instructions.

-

Start the installation process:

$ /root/install_orcharhino.sh --name="orcharhino.example.com" My_orcharhino_Subscription_Key

ATIX AG recommends using the --name option to provide your orcharhino Server with an FQDN at this point. Ensure that you do not use any capital letters in your FQDN.

-

Confirm your settings to register with ATIX AG as follows:

install_orcharhino.sh: You are about to register to OCC using the following settings:
install_orcharhino.sh: orcharhino Subscription Key: 'My_orcharhino_Subscription_Key'
install_orcharhino.sh: orcharhino FQDN: 'orcharhino.example.com'
install_orcharhino.sh: orcharhino IP address: 'My_orcharhino_Server_IP_Address'
install_orcharhino.sh: Proceed with these settings? [Yes/No]

If you enter anything other than Yes, yes, Y, or y, the script exits without taking any further actions. You can always rerun the script with modified options. You can override the My_orcharhino_Server_IP_Address setting using the -i/--ip-addr=ADDR option. You can skip the above confirmation prompt using the -y/--yes option.

-

Access the link to continue with the orcharhino Installer GUI:

http://My_orcharhino_Server_IP_Address:8015/?token=4f27b9328cc0ead7d499c93f34ec9bda5d26e7b50c4420dc0a80dcc04adcf9dd

The installation process takes time depending on your environment.

Unattended orcharhino Installation

You can install orcharhino Server without user interaction using --skip-gui.

This requires a valid /etc/orcharhino-installer/answers.yaml file.

This is an advanced installation method.

-

Set use_custom_certs to true in /etc/orcharhino-installer/answers.yaml.

-

Place your custom_certs.ca, custom_certs.crt, and custom_certs.key files into /etc/orcharhino-installer/.
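Before you start an unattended installation with --skip-gui, you can verify that the required files are in place. The function below is a sketch: it only checks that answers.yaml and, if you use custom certificates, the three custom_certs files exist in /etc/orcharhino-installer/ and are not empty. It does not validate YAML syntax or certificate contents, and its name and the yes/no flag are hypothetical.

```shell
#!/bin/sh
# Sketch: pre-flight check for an unattended installation.
# Checks only that the files named in this guide exist and are non-empty.
# Arguments: directory (default /etc/orcharhino-installer) and "yes" if
# use_custom_certs is set to true in answers.yaml.
check_unattended_files() {
  dir=${1:-/etc/orcharhino-installer}
  custom_certs=${2:-no}
  files=answers.yaml
  if [ "$custom_certs" = yes ]; then
    files="$files custom_certs.ca custom_certs.crt custom_certs.key"
  fi
  rc=0
  for f in $files; do
    if [ ! -s "$dir/$f" ]; then
      echo "missing or empty: $dir/$f" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Usage: check_unattended_files /etc/orcharhino-installer yes
```

If the check succeeds, run install_orcharhino.sh with the --skip-gui option as described above.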

orcharhino Installer GUI

Continue with the orcharhino Installer GUI to finish your orcharhino Server installation process.

-

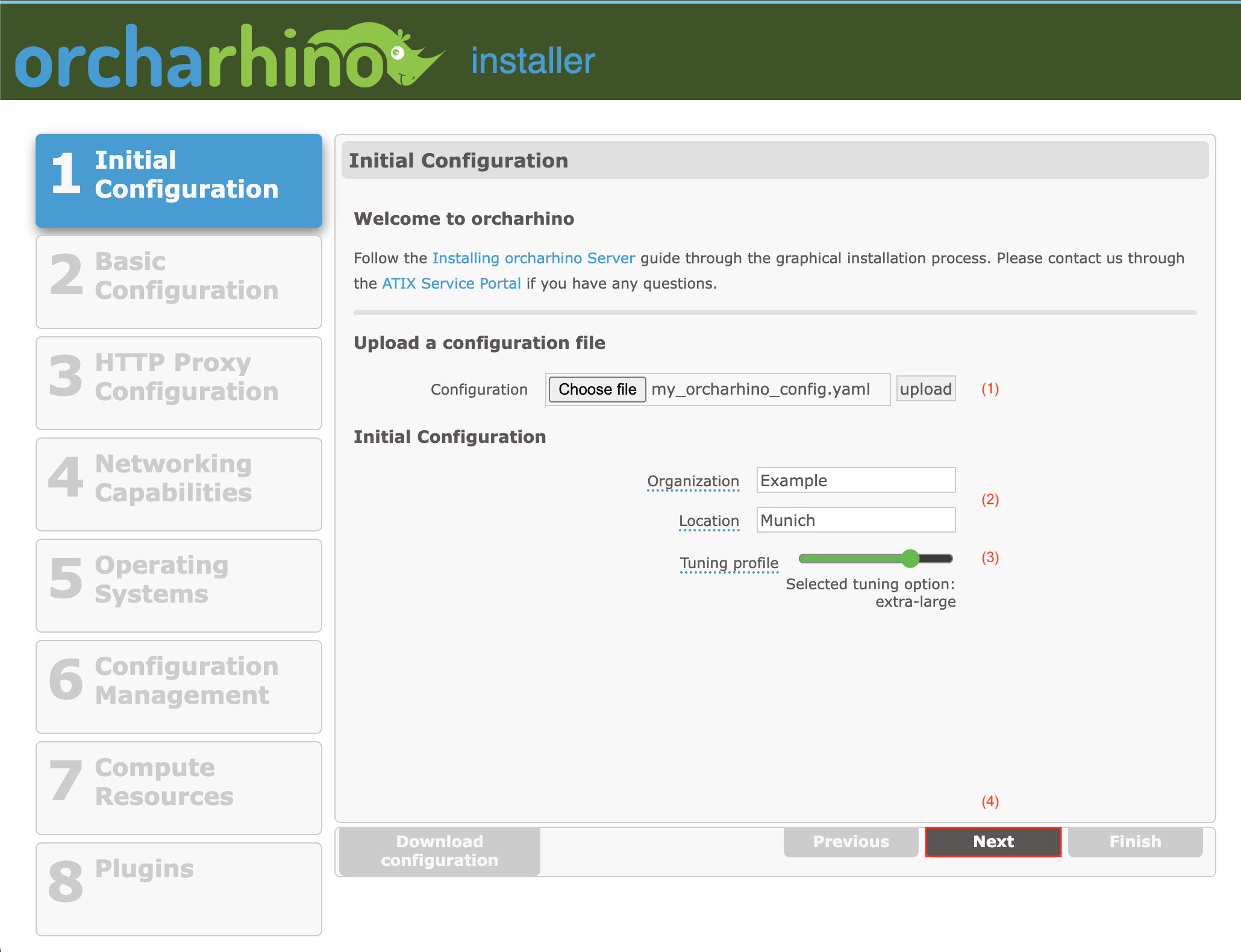

On the Initial Configuration screen:

-

Click Choose file and upload (1) to upload an answers.yaml file to prefill the orcharhino Installer GUI.

-

Enter the initial Organization and Location context (2) for your orcharhino. You can add additional organizations and locations later.

One way to distinguish between orcharhino administrators and regular users is to place your orcharhino Server and any attached orcharhino Proxies into a separate location and/or organization context.

Alternatively, you can achieve a fine-grained permissions concept using roles and filters.

Creating an Organization or Location that contains whitespace or non-ASCII characters has been known to cause bugs. Please choose a single word that does not include umlauts or special characters.

-

Select a Tuning profile (3) using the slider. The tuning profile ensures that your orcharhino makes the best use of the resources available on your orcharhino host. The prefilled value is based on the resources of the host. For more information, see Tuning orcharhino.

-

Click Next (4) to continue.

-

-

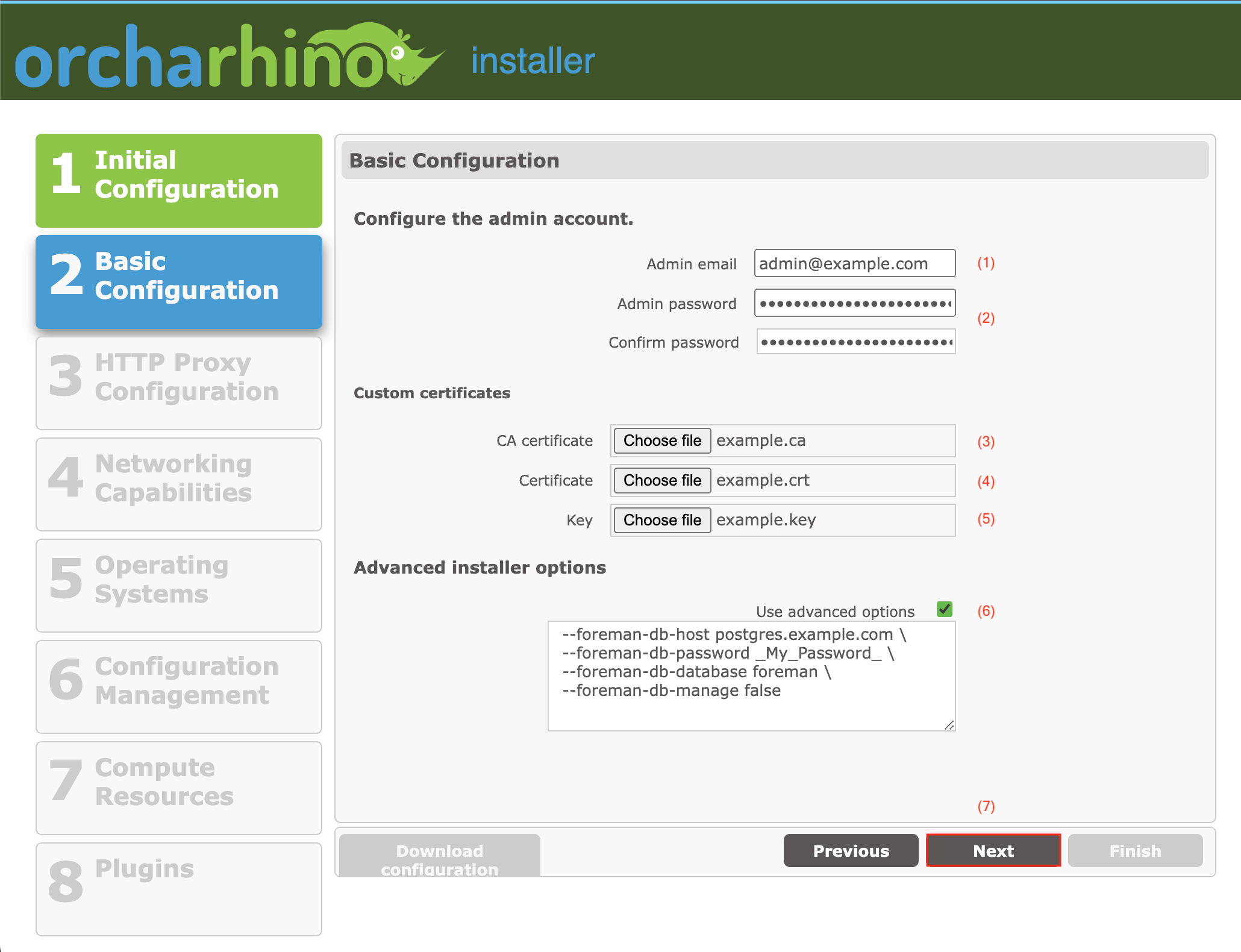

On the Basic Configuration screen:

-

In the Admin email field (1), enter a valid email address at which the orcharhino administrator can be reached.

-

Enter the password for your orcharhino admin account (2).

-

Optional: You can use custom certificates on orcharhino.

-

Click Choose file to upload a custom CA certificate (.ca) file (3).

-

Click Choose file to upload a custom certificate (.crt) file (4).

-

Click Choose file to upload a custom key (.key) file (5).

-

-

Optional: Select Use advanced options (6) to provide advanced installer options. For example, you can use this to define an external database for orcharhino. ATIX AG considers this an advanced feature. If you are unsure, leave the field empty or contact us.

-

Click Next (7) to continue.

-

-

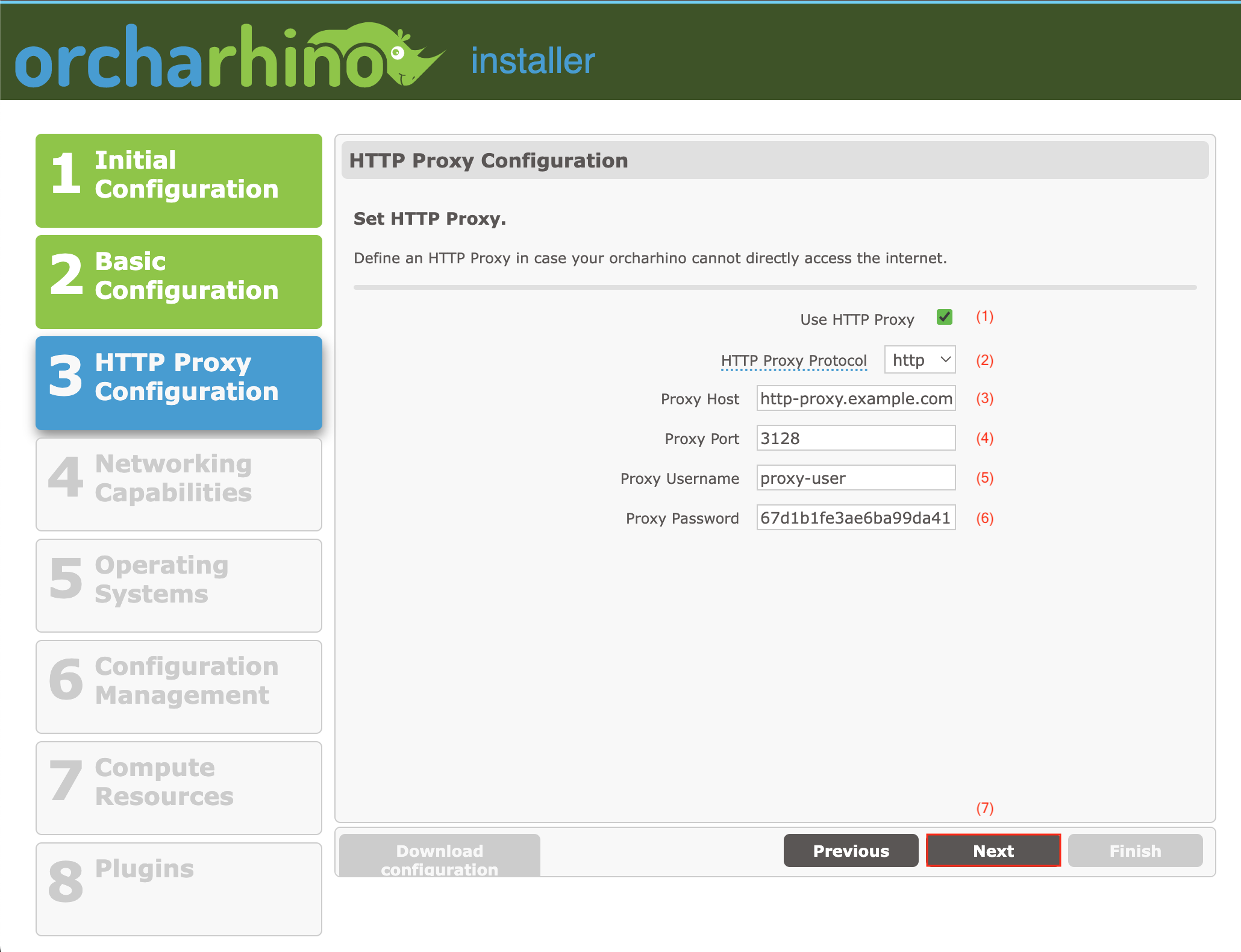

On the HTTP Proxy Configuration screen:

-

If your organization uses an HTTP proxy, select Use HTTP Proxy (1). Enter your HTTP/HTTPS proxy configuration data in the fields provided (2-6).

Ensure that your HTTPS proxy does not modify the used certificates.

-

Click Next (7) to continue.

-

-

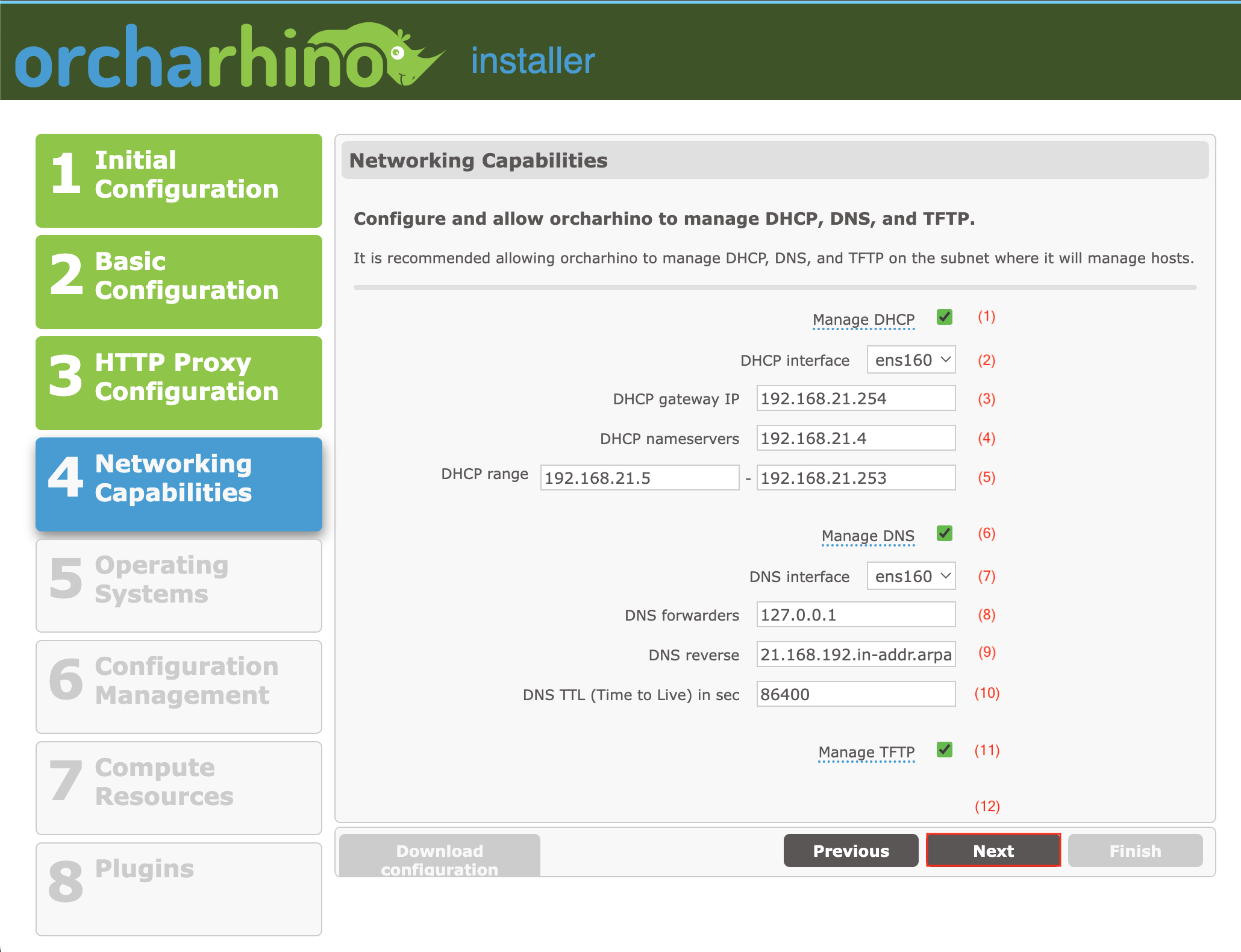

On the Networking Capabilities screen:

ATIX AG recommends allowing orcharhino to manage DHCP, DNS, and TFTP on the subnet in which it manages hosts. Follow all of the steps below for this setup. However, if there is a good reason not to, deselect the corresponding services (1), (6), and/or (11) and disregard steps (2a), (2b), and/or (2c).

-

Configure orcharhino’s DHCP capability (1).

-

For the DHCP interface field (2), select the interface to the network orcharhino deploys hosts to. ATIX AG refers to this network as the internal network henceforth.

The orcharhino Installer GUI prefills the input fields (3), (4), and (5) with plausible values based on your DHCP interface selection. Double-check these auto-generated values before you continue.

-

In the DHCP gateway IP field (3), enter the gateway IP address that managed hosts use on the internal network. The auto-generated value is the default gateway on the selected interface.

-

In the DHCP nameservers field (4), enter the IP address that managed hosts use to resolve DNS queries. If Manage DNS (6) is selected, this is the IP address of the orcharhino host on the internal interface.

-

For the DHCP range field (5), enter the range of IP addresses that is available for managed hosts on the internal network. The installer calculates the largest free range within the IP network by excluding its own address and those of the gateway and name server. It does not verify whether any other hosts already exist within this range.

-

-

Configure orcharhino’s DNS capability (6).

-

For the DNS interface field (7), select the interface to the internal network which you used for the DHCP interface field (2).

The orcharhino Installer GUI prefills the input fields (8) and (9) with plausible values based on your DNS interface selection and the system’s resolv.conf file. Double-check these auto-generated values before you continue.

-

In the DNS forwarders field (8), enter the IP address of your DNS server. The installer pre-fills this field with a DNS server from the system’s resolv.conf file.

-

In the DNS reverse field (9), enter the net ID part of the IP address of the internal network in decimal notation, with the byte groups in reverse order, followed by .in-addr.arpa. For example, a network address of 192.168.0.0/24 becomes 0.168.192.in-addr.arpa. The installer pre-fills this field with the appropriate value for the chosen interface.

-

In the DNS TTL (Time to Live) in sec field (10), enter a value in seconds.

-

-

Click Manage TFTP (11) to allow orcharhino Server to manage TFTP within its network.

-

Click Next (12) to continue.

-

-

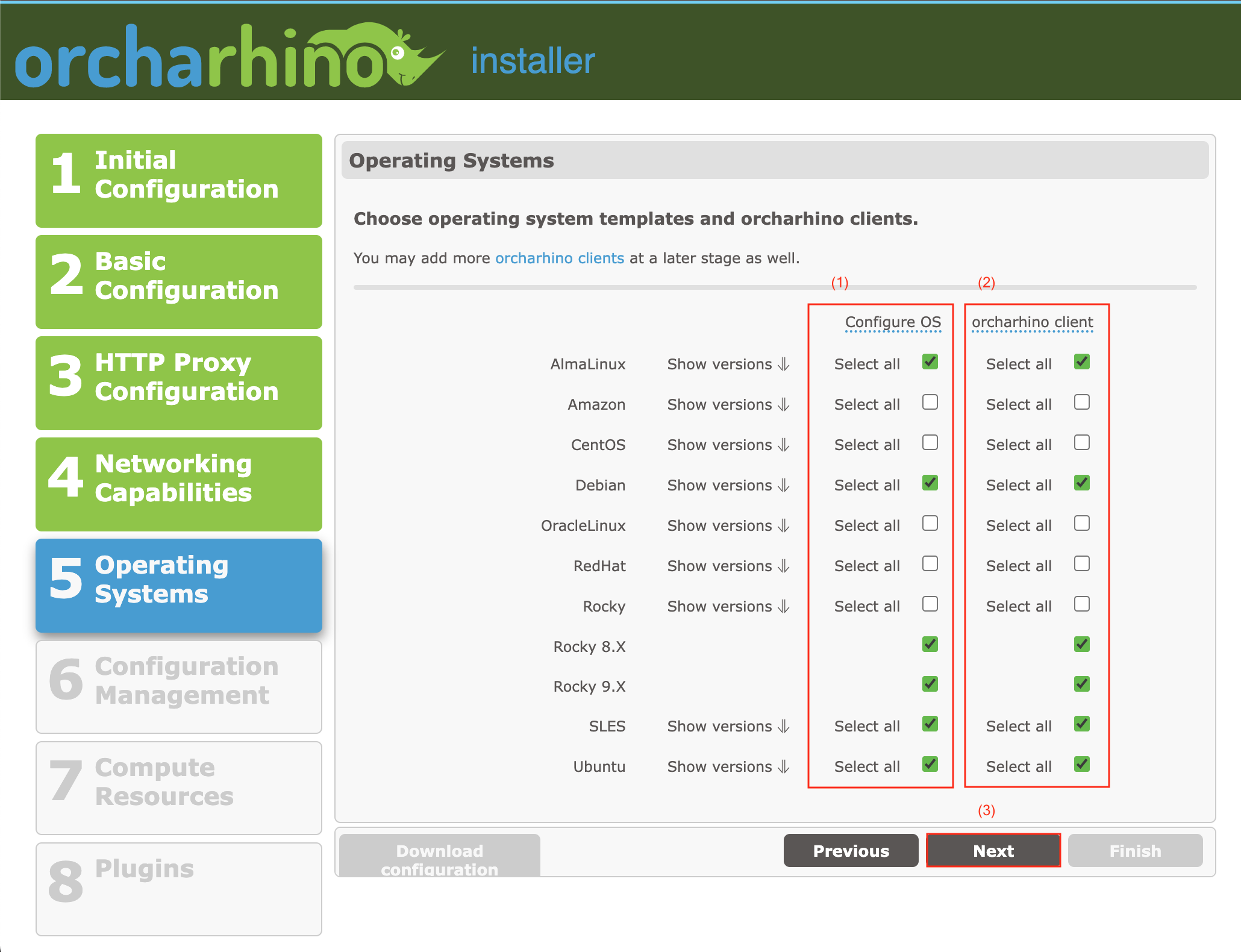

On the Operating Systems screen:

-

In the Configure OS column (1), preconfigure orcharhino with a selection of operating systems. The installer automatically configures the operating system entries, the installation media entries, and the provisioning templates for any selected operating systems.

You cannot select any Red Hat operating systems to be preconfigured by orcharhino because you need a valid subscription manifest file from Red Hat. For more information, see Managing Red Hat Subscriptions.

If you want to deploy hosts running SUSE Linux Enterprise Server, you need to perform additional steps at the end of the installation process to set up your SLES installation media.

-

orcharhino automatically synchronizes the orcharhino Client repositories for any operating system you select in the orcharhino Client column (2).

-

ATIX AG recommends selecting both the operating system and orcharhino Client configuration for your operating systems at the same time.

-

You can set a list of orcharhino Clients in /etc/orcharhino-ansible/or_operating_systems_vars.yaml and run /opt/orcharhino/automation/play_operating_systems.sh on your orcharhino Server to configure operating systems and add orcharhino Clients at a later stage.

-

Click Next (3) to continue.

-

-

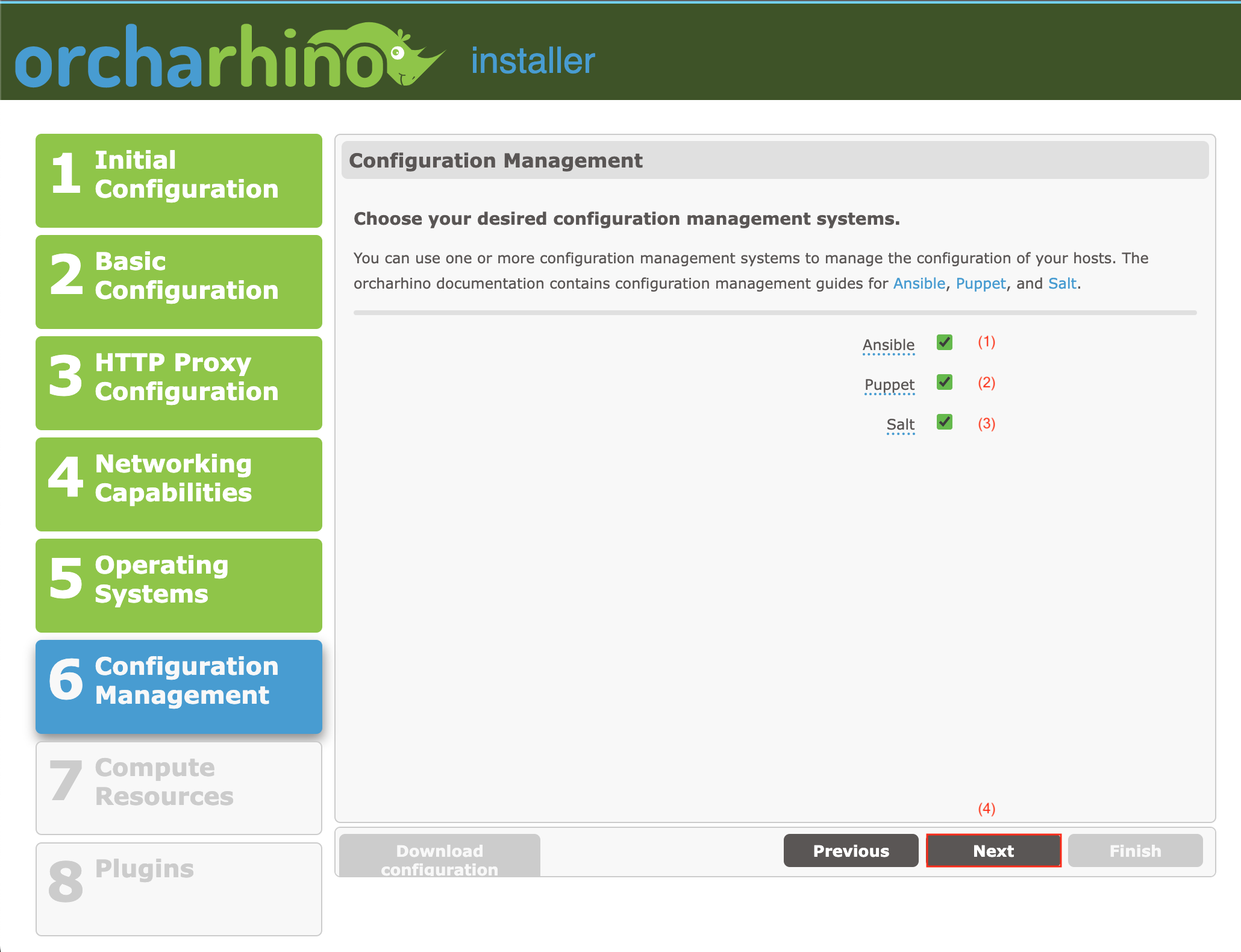

On the Configuration Management screen:

-

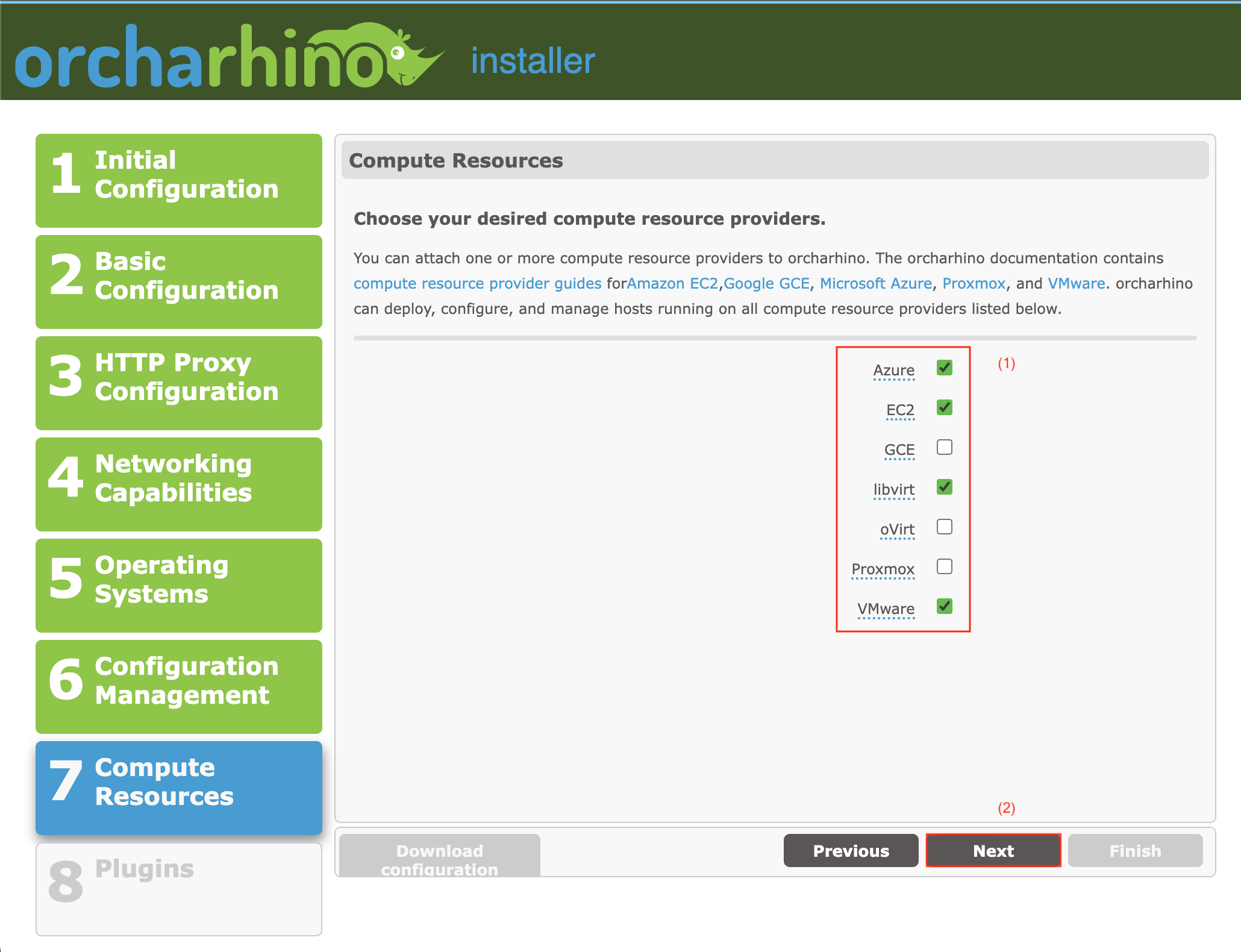

On the Compute Resources screen:

-

Choose Compute Resource Plug-ins (1) to install compute resource plug-ins to your orcharhino. For more information on how to attach those compute resources, see Amazon EC2, Google GCE, Microsoft Azure, Proxmox, and VMware.

-

Click Next (2) to continue.

-

-

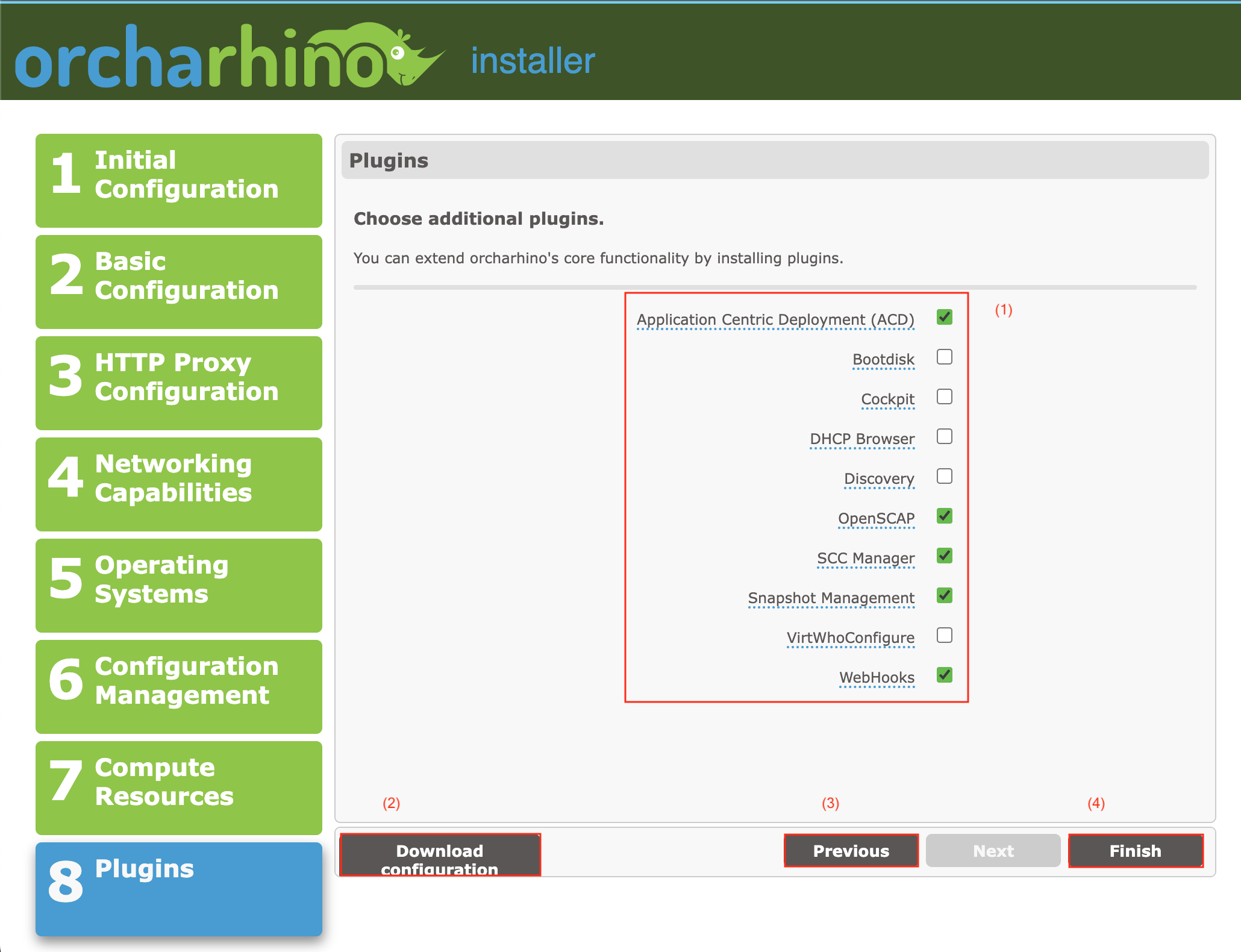

On the Plug-ins screen:

-

Select the Plug-ins (1) you want to install on your orcharhino Server. You can also install plug-ins at a later stage.

-

Click Download configuration (2) to download the answers.yaml file based on your settings within the orcharhino Installer GUI to your local machine. You can use this file to recreate your inputs for another orcharhino Server installation. The downloaded file does not contain any uploaded custom certificates.

-

Click Previous (3) to review your configuration.

-

Click Finish (4) to start the installation process. This displays console output in the browser window and takes time depending on your environment.

-

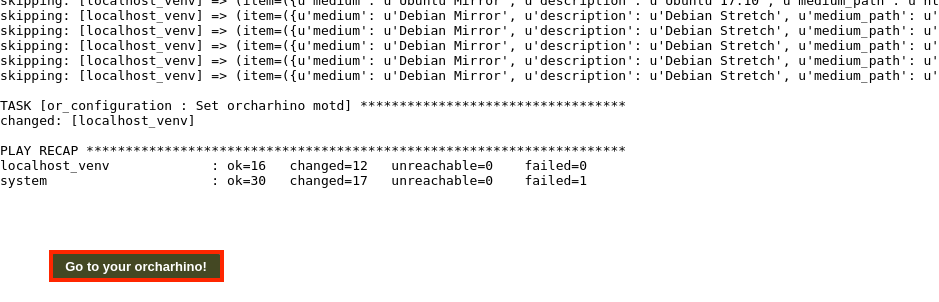

After your orcharhino Server is successfully installed, the orcharhino Installer GUI displays output similar to the following:

-

Click Go to your orcharhino! to log in to your orcharhino.

-

Log in as the admin user with the password that you set on the Basic Configuration screen.

Enjoy your brand new orcharhino installation! If you are unsure how to continue, have a look at the first steps guide.
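The value for the DNS reverse field on the Networking Capabilities screen can be derived mechanically from the internal network address, which is useful for double-checking the pre-filled value. The shell function below is a sketch with a hypothetical name that handles only octet-aligned prefixes (/8, /16, and /24). For other prefix lengths, the reverse zone is not a simple reversal of whole byte groups, so the function rejects them.

```shell
#!/bin/sh
# Sketch: derive the DNS reverse zone name from an IPv4 network in CIDR
# notation. Only octet-aligned prefixes (/8, /16, /24) are handled; other
# prefix lengths are rejected.
reverse_zone() {
  net=${1%/*}
  prefix=${1#*/}
  # Split the network address into its four byte groups.
  old_ifs=$IFS
  IFS=.
  set -- $net
  IFS=$old_ifs
  [ $# -eq 4 ] || return 1
  # Keep the net ID byte groups and reverse their order.
  case $prefix in
    8) echo "$1.in-addr.arpa" ;;
    16) echo "$2.$1.in-addr.arpa" ;;
    24) echo "$3.$2.$1.in-addr.arpa" ;;
    *) return 1 ;;
  esac
}

reverse_zone 192.168.0.0/24   # prints 0.168.192.in-addr.arpa
```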

Configuring pull-based transport for remote execution

By default, remote execution uses push-based SSH as the transport mechanism for the Script provider. If your infrastructure prohibits outgoing connections from orcharhino to hosts, you can use remote execution with pull-based transport instead, because the host initiates the connection to orcharhino. The use of pull-based transport is not limited to those infrastructures.

The pull-based transport comprises pull-mqtt mode on orcharhino Proxies in combination with a pull client running on hosts.


-

Enable the pull-based transport on your orcharhino:

$ orcharhino-installer --foreman-proxy-plugin-remote-execution-script-mode=pull-mqtt

-

Configure the firewall to allow the MQTT service on port 1883:

$ firewall-cmd --add-service=mqtt

-

Make the changes persistent:

$ firewall-cmd --runtime-to-permanent

-

In pull-mqtt mode, hosts subscribe for job notifications to either your orcharhino Server or any orcharhino Proxy Server through which they are registered. Ensure that orcharhino Server sends remote execution jobs to that same orcharhino Server or orcharhino Proxy Server:

-

In the orcharhino management UI, navigate to Administer > Settings.

-

On the Content tab, set the value of Prefer registered through orcharhino Proxy for remote execution to Yes.

-

-

Configure your hosts for the pull-based transport. For more information, see Transport modes for remote execution in Managing Hosts.

Setting a Tuning Profile

You can set a tuning profile to make the best use of powerful orcharhino hosts.

-

On your orcharhino Server, set a tuning profile:

$ orcharhino-installer --tuning My_Tuning_Profile

You can choose from default (the smallest option), medium, large, extra-large, and extra-extra-large.

| Tuning Profile | Required Computing Power |
|---|---|
| medium | 32 GiB of memory and 8 CPU cores |
| large | 64 GiB of memory and 16 CPU cores |
| extra-large | 128 GiB of memory and 32 CPU cores |
| extra-extra-large | 256 GiB of memory and 48 CPU cores |
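To pick a profile from the table above, you can compare the host's memory and CPU cores against the listed requirements. The function below is a hypothetical sketch that returns the largest profile whose memory and core counts are both met, and default otherwise. Treat its result as a starting point for orcharhino-installer --tuning, not as a sizing rule from ATIX AG.

```shell
#!/bin/sh
# Sketch: suggest the largest tuning profile whose requirements from the table
# are met. Inputs: memory in GiB and number of CPU cores.
# Hosts below the "medium" requirements get "default".
suggest_tuning_profile() {
  mem_gib=$1
  cores=$2
  if [ "$mem_gib" -ge 256 ] && [ "$cores" -ge 48 ]; then
    echo extra-extra-large
  elif [ "$mem_gib" -ge 128 ] && [ "$cores" -ge 32 ]; then
    echo extra-large
  elif [ "$mem_gib" -ge 64 ] && [ "$cores" -ge 16 ]; then
    echo large
  elif [ "$mem_gib" -ge 32 ] && [ "$cores" -ge 8 ]; then
    echo medium
  else
    echo default
  fi
}

# On the host itself you could pass detected values, for example
#   suggest_tuning_profile "$(free -g | awk '/^Mem:/ {print $2}')" "$(nproc)"
# but note that free -g rounds down and may report slightly less than the
# installed memory.
suggest_tuning_profile 64 16   # prints large
```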

Resetting SSL Certificates

Resetting the SSL certificates removes changes made to the original self-signed certificates created during the installation. This lets you recover from an incorrectly updated SSL certificate without reverting to a previous backup or snapshot.

-

On your orcharhino Server, reset the existing certificates:

$ orcharhino-installer --certs-reset

Setting the Host Name

-

On your orcharhino Server, set the host name:

$ katello-change-hostname My_Host_Name -u My_Username -p My_Password

Synchronizing the system clock with chronyd

To minimize the effects of time drift, you must synchronize the system clock on the base operating system on which you want to install orcharhino with Network Time Protocol (NTP) servers. If the base operating system clock is configured incorrectly, certificate verification might fail.

-

Install the chrony package:

$ dnf install chrony

-

Start and enable the chronyd service:

$ systemctl enable --now chronyd
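To confirm that chronyd has synchronized the clock, you can inspect the output of chronyc tracking. The function below is a sketch with a hypothetical name; it assumes the output contains a Leap status line, which reads Normal when the clock is synchronized and Not synchronised otherwise.

```shell
#!/bin/sh
# Sketch: decide from "chronyc tracking" output (read from stdin) whether the
# clock is synchronized.
# Assumption: the output contains a line such as "Leap status     : Normal".
clock_synchronized() {
  awk -F ': *' '
    /^Leap status/ { found = 1; ok = ($2 == "Normal") }
    END { exit !(found && ok) }
  '
}

# Usage: chronyc tracking | clock_synchronized && echo "system clock is synchronized"
```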

The text and illustrations on this page are licensed by ATIX AG under a Creative Commons Attribution Share Alike 4.0 International ("CC BY-SA 4.0") license. This page also contains text from the official Foreman documentation which uses the same license ("CC BY-SA 4.0").